Dataset

This page provides details of our dataset and the supporting resources available for download, such as annotations and object models. Additionally, we offer a selection of sample cases that showcase the human-human throw&catch activities featured in the dataset.

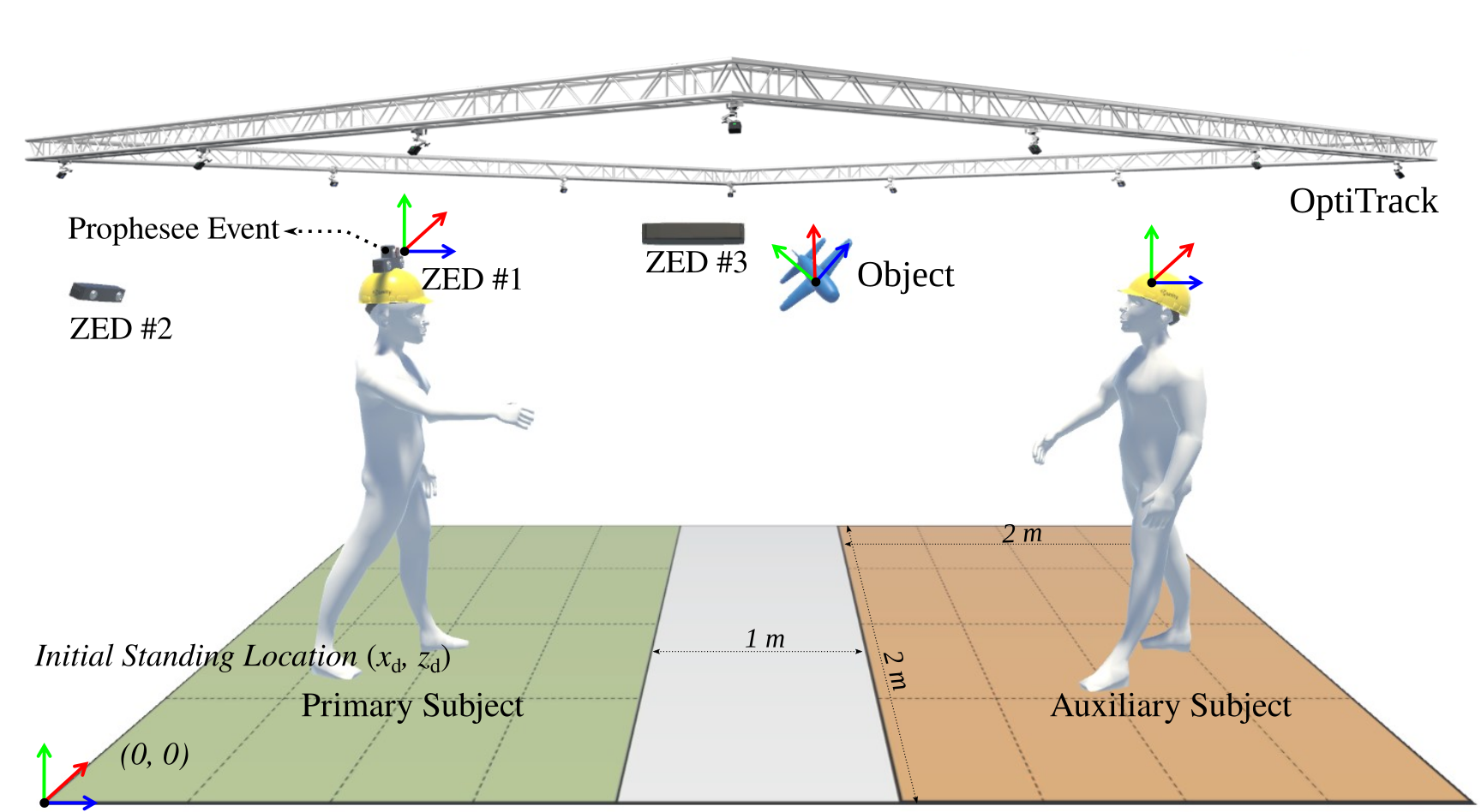

Briefly, as shown in the figure, each human-human throw&catch activity recorded in our dataset comprises multi-view synchronized RGB, depth, and event streams. It is accompanied by a hierarchy of semantic and dense annotations, such as human body, hand, and object motions.
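Because the annotated moments are stored as UNIX timestamps (see the annotation table further down), a common first step is to find the frame of a synchronized, frame-based stream (RGB or depth) that lies closest to such a moment. The sketch below assumes you already have a sorted list of per-frame UNIX timestamps for one stream; the function name `nearest_frame` and that input format are hypothetical, not part of the dataset's tooling. Event streams are asynchronous and would need a time-window query instead.

```python
from bisect import bisect_left
from typing import Sequence


def nearest_frame(frame_times: Sequence[float], t: float) -> int:
    """Return the index of the frame whose timestamp is closest to ``t``.

    ``frame_times`` holds per-frame UNIX timestamps (a hypothetical input
    format) and must be sorted in ascending order.
    """
    if not frame_times:
        raise ValueError("frame_times is empty")
    i = bisect_left(frame_times, t)  # first index with frame_times[i] >= t
    if i == 0:
        return 0  # t is before (or exactly at) the first frame
    if i == len(frame_times):
        return len(frame_times) - 1  # t is after the last frame
    before, after = frame_times[i - 1], frame_times[i]
    # Pick the closer neighbour; ties go to the earlier frame.
    return i - 1 if t - before <= after - t else i
```

For example, with `rgb_times = [100.000, 100.033, 100.067]`, `nearest_frame(rgb_times, 100.04)` returns `1`. Binary search keeps the lookup logarithmic in the number of frames, which matters for long recordings.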

Dataset Construction

The dataset is recorded in a flat lab area that closely resembles real-world throw-and-catch scenarios, featuring unstructured, cluttered, and dynamic surroundings, as illustrated schematically below. For comprehensive details on the dataset construction, we encourage users to refer to our technical paper and the associated GitHub repository.


In addition, our dataset provides a hierarchy of semantic and dense annotations, e.g., the ground-truth human hand, body, and object motions captured with motion capture systems.

The Dataset in Numbers

Some facts about our dataset:

| Category | Details |
| --- | --- |
| Subjects | 34 subjects (29 males, 5 females, aged 20-31 years) |
| Objects | 52 objects (21 rigid objects, 16 soft objects, 15 3D-printed objects) |
| Actions | Each pair of subjects performs 10 random actions (5 throwing actions, 5 catching actions) |
| Recordings | 15K recordings |
| Visual modalities | RGB, depth, event |
| Views | Egocentric, static third-person (side), static third-person (back) |
| Annotations | Human hand and body motion, object motion, average object velocity, human grasp mode, etc. |

All captured data, both raw and processed, are stored in Dropbox, complete with rich annotations and other supporting files. The data is organized hierarchically and accompanied by a variety of supporting tutorials and files, such as object models. We encourage users to consult the data guide and our technical paper for details of the data hierarchy and of each data file stored in the dataset.


Additionally, we provide a collection of scripted tools to facilitate the usage, open maintenance, and extension of our dataset.

Sample Cases

To offer a quick overview of the dataset, we provide several sample cases here, available in Dropbox.

These samples include the recorded streams (both raw and processed) of six throw&catch activities from our dataset, together with their annotation files. The data is organized hierarchically, as described earlier.


| Preview | Take ID | Object | Description |
| --- | --- | --- | --- |
|  | 001045 | Helmet | The auxiliary (right) subject threw a 'helmet' with the 'right' hand from the hand location ('right', 'chest') and the body location (1.17, 3.28), and then the primary (left) subject successfully caught the helmet with 'both' hands from the hand location ('middle', 'chest') and the body location (0.21, 1.14). |
|  | 002870 | Magazine | The primary (left) subject threw a 'magazine' with 'both' hands from the hand location ('middle', 'overhand') and the body location (1.2, 1.05), and then the auxiliary (right) subject successfully caught the magazine with 'both' hands from the hand location ('middle', 'underhand') and the body location (1.54, 3.01). |
|  | 004521 | Apple (3D printed) | The primary (left) subject threw an 'apple' with the 'right' hand from the hand location ('middle', 'chest') and the body location (0.15, 1.77), and then the auxiliary (right) subject successfully caught the apple with 'both' hands from the hand location ('middle', 'underhead') and the body location (1.71, 4.47). |
|  | 005915 | Beverage can | The auxiliary (right) subject threw a 'beverage can' with the 'left' hand from the hand location ('middle', 'chest') and the body location (1.13, 3.29), and then the primary (left) subject successfully caught the can with 'both' hands from the hand location ('middle', 'chest') and the body location (0.92, 1.14). |
|  | 005616 | Bottled water | The auxiliary (right) subject threw the 'bottled water' with 'both' hands from the hand location ('middle', 'overhead') and the body location (1.19, 4.00), and then the primary (left) subject successfully caught the bottle with 'both' hands from the hand location ('middle', 'chest') and the body location (0.36, 0.81). |
|  | 008683 | Doll | The primary (left) subject threw a 'doll' with the 'right' hand from the hand location ('middle', 'chest') and the body location (0.12, 1.75), and then the auxiliary (right) subject successfully caught the doll with 'both' hands from the hand location ('middle', 'chest') and the body location (1.65, 4.56). |
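As a quick worked example of how the (x, z) body locations in these descriptions can be used, the sketch below computes the straight-line separation between thrower and catcher for each sample take. It assumes (x, z) are planar ground coordinates in a common frame; the units are not stated on this page (metres seem likely but are an assumption), and the two locations may refer to different annotated moments, so the result only approximates the throwing distance. The names `ground_distance` and `SAMPLES` are illustrative, not part of the dataset's tools.

```python
import math
from typing import Dict, Tuple

Location = Tuple[float, float]  # (x, z) body location from the annotations


def ground_distance(p: Location, q: Location) -> float:
    """Euclidean distance between two (x, z) body locations."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


# (thrower, catcher) body locations copied from the sample-case descriptions.
SAMPLES: Dict[str, Tuple[Location, Location]] = {
    "001045": ((1.17, 3.28), (0.21, 1.14)),
    "002870": ((1.2, 1.05), (1.54, 3.01)),
    "004521": ((0.15, 1.77), (1.71, 4.47)),
    "005915": ((1.13, 3.29), (0.92, 1.14)),
    "005616": ((1.19, 4.00), (0.36, 0.81)),
    "008683": ((0.12, 1.75), (1.65, 4.56)),
}

if __name__ == "__main__":
    # Print the thrower-catcher separation for each sample take.
    for take_id, (thrower, catcher) in SAMPLES.items():
        print(f"{take_id}: {ground_distance(thrower, catcher):.2f}")
```

For these six takes the separation ranges from about 1.99 (take 002870) to about 3.30 (take 005616) in the dataset's (x, z) units.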

Annotation

Our dataset offers a hierarchy of semantic and dense annotations, making it suitable for a variety of research applications, ranging from low-level physical skill learning to high-level pattern recognition. You can download and examine the sample annotations from Dropbox.

Briefly, each throw&catch activity in our dataset is labeled with:

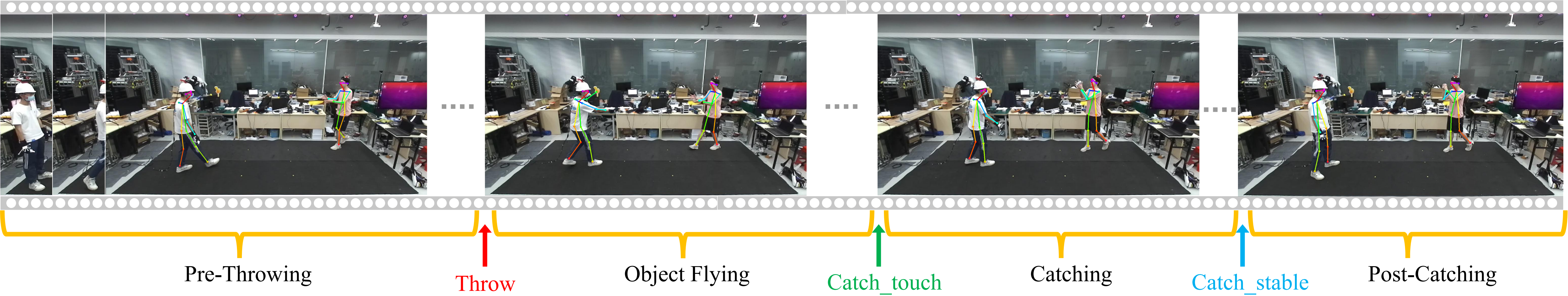

1. Human-object interaction states. As depicted in the figure, each throw&catch activity is segmented into four phases (pre-throwing, object flying, catching, and post-catching), delimited by three manually annotated moments: throw, catch touch, and catch stable.


2. Human hand, body and object motions. The ground-truth human hand joint motions and the 6D body and object motions are recorded with high-precision motion capture systems, e.g., OptiTrack and MoCap gloves.

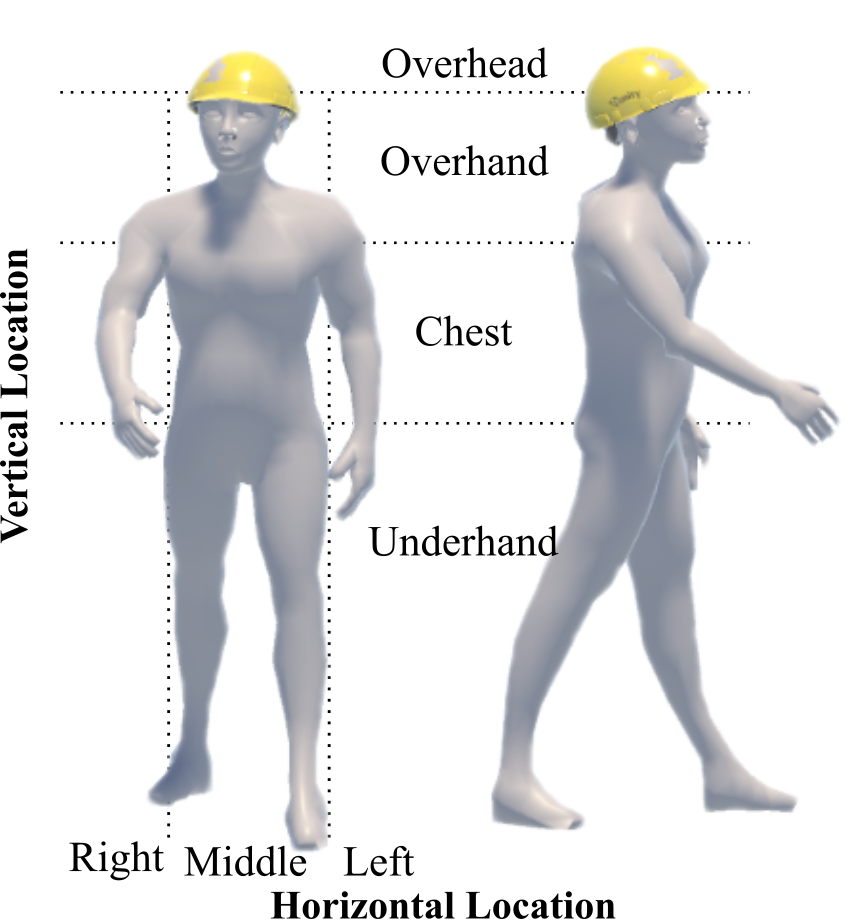

3. Other semantic and dense annotations. The human subjects' behaviors are manually inspected and annotated with symbolic labels, including grasp mode and hand locations during throw&catch, as shown below. Moreover, the subjects' exact standing locations and the average flying speed of the object are automatically annotated as quantitative labels.
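The phase segmentation in item 1 and the average flying speed in item 3 can be sketched together: the three annotated moments split a take's timeline into the four phases, and the object-flying phase (from throw to catch touch) is the window over which an average speed is taken. This is a minimal sketch under stated assumptions, not the dataset's own implementation: the names `Moments`, `phase_at`, and `average_flight_speed` are hypothetical, phases are treated as half-open intervals, and "average speed" is taken here as travelled path length divided by elapsed time over object positions sampled in metres (the dataset's exact definition may differ, e.g. straight-line displacement over time).

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Moments:
    """The three manually annotated moments of one take (UNIX timestamps)."""
    throw: float
    catch_touch: float
    catch_stable: float

    def __post_init__(self) -> None:
        if not (self.throw <= self.catch_touch <= self.catch_stable):
            raise ValueError("expected throw <= catch_touch <= catch_stable")


def phase_at(t: float, m: Moments) -> str:
    """Map timestamp t to one of the four interaction phases.

    Each annotated moment starts the phase that follows it (half-open
    intervals), which is an assumption of this sketch.
    """
    if t < m.throw:
        return "pre-throwing"
    if t < m.catch_touch:
        return "object flying"
    if t < m.catch_stable:
        return "catching"
    return "post-catching"


def average_flight_speed(times: Sequence[float],
                         positions: Sequence[Sequence[float]],
                         m: Moments) -> float:
    """Path length / elapsed time over samples with throw <= t <= catch_touch."""
    flying = [(t, p) for t, p in zip(times, positions)
              if m.throw <= t <= m.catch_touch]
    if len(flying) < 2:
        raise ValueError("need at least two samples in the flying phase")
    # Sum the distances between consecutive object positions.
    path = sum(math.dist(p0, p1) for (_, p0), (_, p1) in zip(flying, flying[1:]))
    elapsed = flying[-1][0] - flying[0][0]
    if elapsed <= 0:
        raise ValueError("flying-phase samples span zero time")
    return path / elapsed
```

Using path length rather than displacement makes the estimate insensitive to the arc of the trajectory; swapping in `math.dist(first, last)` would give the straight-line variant instead.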


The comprehensive annotation hierarchy is outlined below:

| Name | Description | Value | Labeling Type |
| --- | --- | --- | --- |
| Object | The thrown object | 'object_id' | automatic |
| Throw | The moment when the subject's hand(s) break contact with the thrown object during throwing | UNIX timestamp | manual |
| - Grasp mode | The subject's grasp mode when throwing the object at the "throw" moment | {'left', 'right', 'both'} | manual |
| - Throw vertical | The vertical location(s) of the subject's hand(s) when throwing the object at the "throw" moment | {'overhead', 'overhand', 'chest', 'underhand'} | manual |
| - Throw horizontal | The horizontal location(s) of the subject's hand(s) when throwing the object at the "throw" moment | {'left', 'middle', 'right'} | manual |
| - Catch vertical | The vertical location(s) of the subject's hand(s) to catch the object at the "throw" moment | {'overhead', 'overhand', 'chest', 'underhand'} | manual |
| - Catch horizontal | The horizontal location(s) of the subject's hand(s) to catch the object at the "throw" moment | {'left', 'middle', 'right'} | manual |
| - Throw location | The subject's exact body location when throwing at the "throw" moment | (x, z) | automatic |
| - Catch location | The subject's exact body location for catching at the "throw" moment | (x, z) | automatic |
| Catch_touch | The moment when the subject's hand(s) first touch the flying object during catching | UNIX timestamp | manual |
| - Catch location | The subject's exact body location when catching the object at the "catch_touch" moment | (x, z) | automatic |
| - Object speed | The object's average speed during free flight | m/s | automatic |
| Catch_stable | The moment when the subject stably catches the flying object | UNIX timestamp | manual |
| - Grasp mode | The subject's grasp mode when catching the object at the "catch_stable" moment | {'left', 'right', 'both'} | manual |
| - Vertical location | The vertical location(s) of the subject's hand(s) when catching the object at the "catch_stable" moment | {'overhead', 'overhand', 'chest', 'underhand'} | manual |
| - Horizontal location | The horizontal location(s) of the subject's hand(s) when catching the object at the "catch_stable" moment | {'left', 'middle', 'right'} | manual |
| - Catch result | Whether the object is stably caught by the subject | {'success', 'fail'} | manual |
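To make the label vocabularies in the table above concrete, here is a hypothetical in-memory record for one take with a consistency check. The field names, the flattened layout, and the `TakeAnnotation`/`HandLocation` classes are illustrative assumptions, not the format of the released annotation files; it also assumes every moment is present, which may not hold for failed catches. Only the label sets are copied from the table.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Label vocabularies copied from the annotation table.
GRASP_MODES = {"left", "right", "both"}
VERTICAL = {"overhead", "overhand", "chest", "underhand"}
HORIZONTAL = {"left", "middle", "right"}
CATCH_RESULTS = {"success", "fail"}


@dataclass
class HandLocation:
    horizontal: str  # expected to be one of HORIZONTAL
    vertical: str    # expected to be one of VERTICAL


@dataclass
class TakeAnnotation:
    # Hypothetical field names; the released files may be organized differently.
    object_id: str
    throw_time: float                     # UNIX timestamp
    throw_grasp: str
    throw_hand: HandLocation
    throw_location: Tuple[float, float]   # (x, z)
    catch_touch_time: float               # UNIX timestamp
    catch_location: Tuple[float, float]   # (x, z) at catch_touch
    object_speed: float                   # m/s
    catch_stable_time: float              # UNIX timestamp
    catch_grasp: str
    catch_hand: HandLocation
    catch_result: str

    def problems(self) -> List[str]:
        """Return human-readable consistency problems (empty list if none)."""
        issues: List[str] = []
        checks = [
            ("throw_grasp", self.throw_grasp, GRASP_MODES),
            ("catch_grasp", self.catch_grasp, GRASP_MODES),
            ("throw_hand.horizontal", self.throw_hand.horizontal, HORIZONTAL),
            ("throw_hand.vertical", self.throw_hand.vertical, VERTICAL),
            ("catch_hand.horizontal", self.catch_hand.horizontal, HORIZONTAL),
            ("catch_hand.vertical", self.catch_hand.vertical, VERTICAL),
            ("catch_result", self.catch_result, CATCH_RESULTS),
        ]
        for name, value, vocab in checks:
            if value not in vocab:
                issues.append(f"{name}={value!r} not in {sorted(vocab)}")
        if not (self.throw_time <= self.catch_touch_time <= self.catch_stable_time):
            issues.append("moments not ordered throw <= catch_touch <= catch_stable")
        if self.object_speed < 0:
            issues.append("object_speed must be non-negative")
        return issues
```

A check like this is a cheap way to catch labeling slips: for instance, the vertical label 'underhead' written in sample take 004521 is not one of the four vertical labels in the table and would be reported.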

For additional information about the annotations, we encourage users to refer to our technical paper and the GitHub repository of the dataset. Furthermore, we provide an annotation tool with a comprehensive technical tutorial, enabling users to annotate custom-captured data from a recording framework similar or identical to ours.

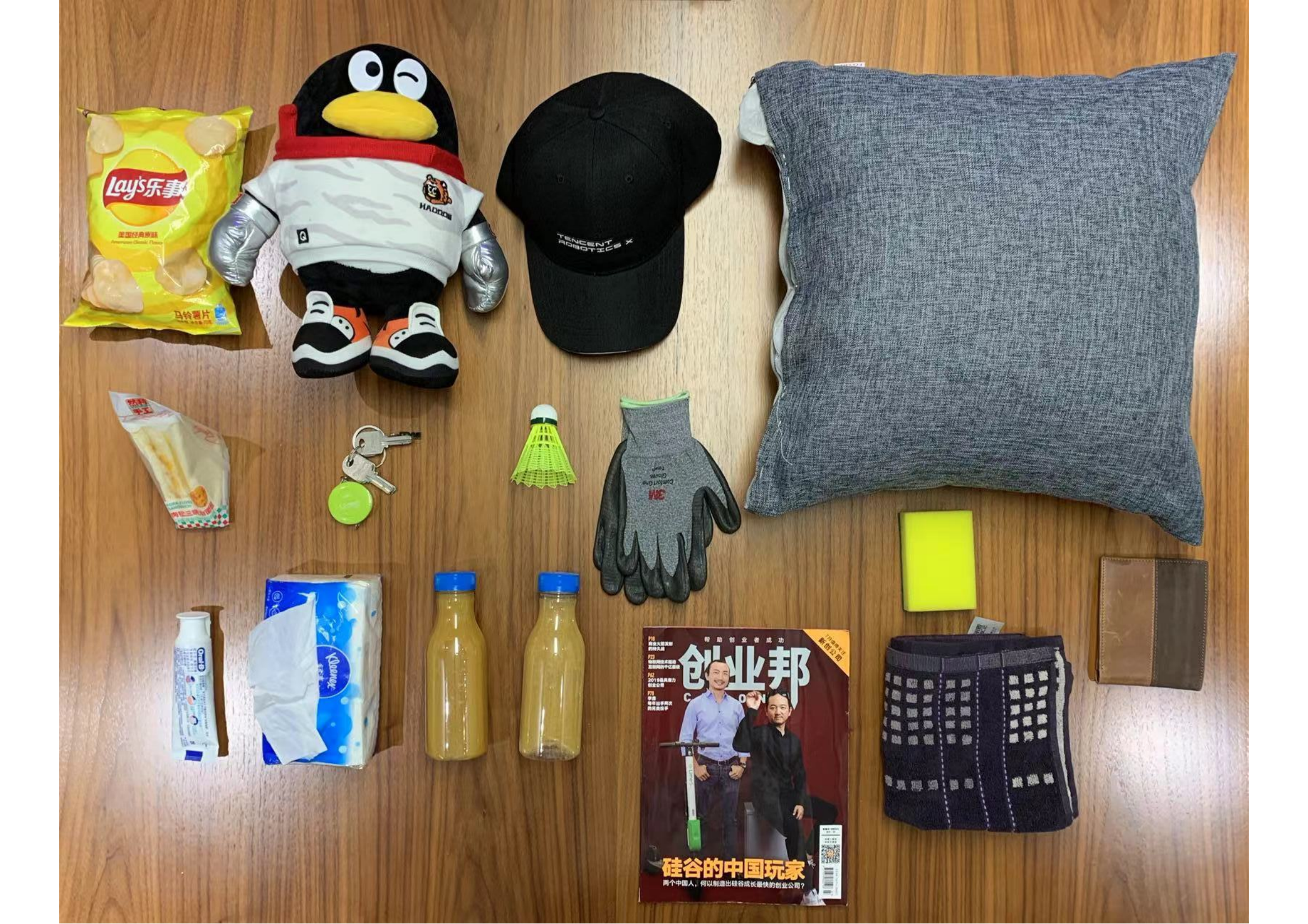

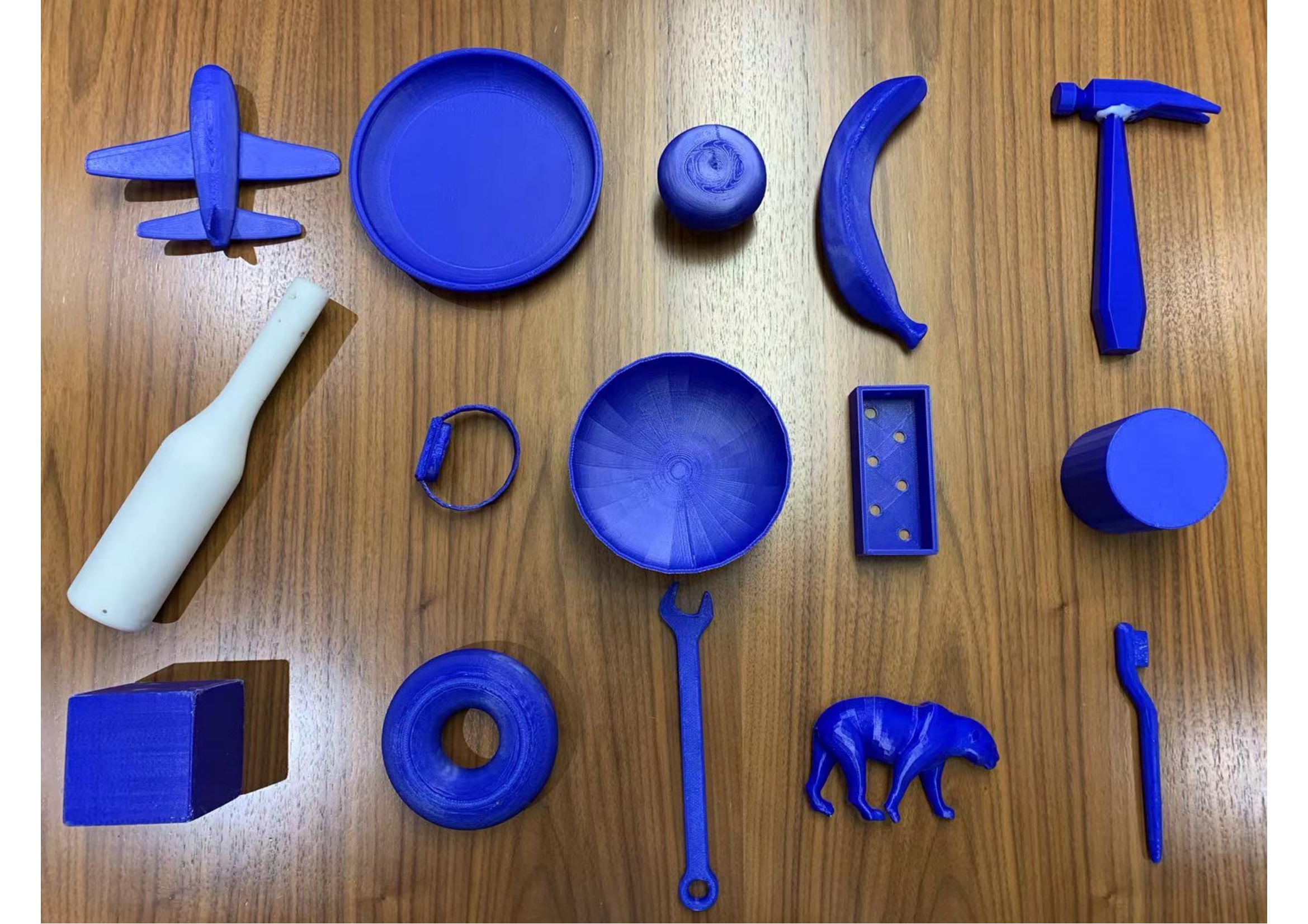

Objects

The dataset comprises a total of 52 objects, which can be broadly categorized into 21 rigid objects, 16 soft objects, and 15 3D-printed objects. These objects were selected because they are commonly found and manipulated in throw-and-catch activities in domestic and/or industrial settings. You can download the scanned object models from Dropbox.

| Rigid objects | Soft objects | 3D printed objects |
| --- | --- | --- |

License

All of our data is for academic use only. Any commercial use is prohibited!

By downloading the data, you accept and agree to the terms of