Recorder

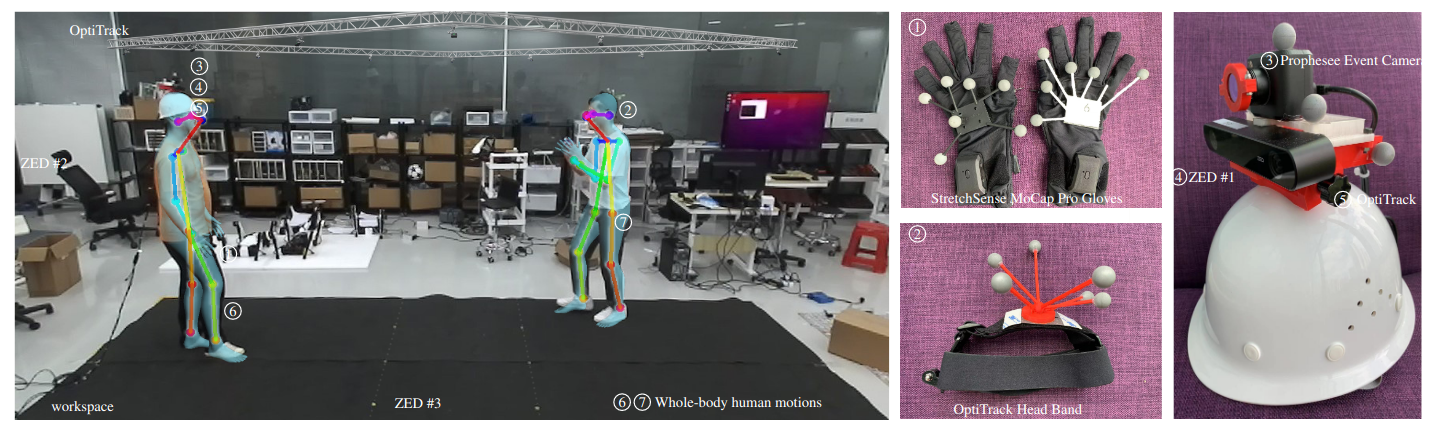

Our recording framework combines several high-precision motion tracking and visual streaming systems with a suite of ancillary devices, tools and customized scripts. It supports high-quality synchronized streaming, saving and visualization of human throw-and-catch activities from multiple sensors with varying sampling rates and data formats.

If you intend to record human demonstrations similar to ours, we provide step-by-step instructions and scripts in the dataset’s GitHub repository.

Device Details

Our recording framework uses multiple motion tracking and visual streaming systems, whose specifications are briefly outlined below. We encourage users to consult our technical paper for a quick overview of their deployment and to refer to the official product pages for detailed technical specifications.

| Device | Manufacturer | Recording Content | FPS | Resolution |
|---|---|---|---|---|
| ① Gloves | StretchSense MoCap Pro | Primary’s Hand Pose | 120 | - |
| ②⑤ Tracker | OptiTrack | 6D Head Pose | 240 | - |
| ③ Event Camera | Prophesee | Event | - | 1280x720 |
| ④ ZED Camera | Stereolabs | RGB-D | 60 | 1280x720 |

We use the Precision Time Protocol (PTP) to synchronize the device clocks with sub-millisecond precision (approximately 0.3 ms). The maximum offset across data streams is ≤ 1 frame at 60 FPS (about 16.7 ms), as assessed during manual annotation. We encourage users to consult the technical document provided in the GitHub repository, which explains in detail how we process and synchronize each data modality.
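Because the streams share a synchronized clock but run at different rates, a common way to combine them is to match each sample of a reference stream to the nearest-in-time sample of another stream. The sketch below illustrates that idea on synthetic timestamps. It is not the dataset's own processing code: the function names `nearest_index` and `align_to_reference` are hypothetical, timestamps are assumed to be sorted and expressed in seconds, and the default tolerance simply reuses the one-frame-at-60-FPS bound stated above.

```python
from bisect import bisect_left

# One frame at 60 FPS, in seconds (the cross-stream offset bound stated above).
FRAME_PERIOD_60FPS = 1.0 / 60.0


def nearest_index(timestamps, t):
    """Return the index of the entry in `timestamps` closest to `t`.

    Assumes `timestamps` is non-empty and sorted in ascending order.
    """
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Choose whichever neighbour lies closer to t.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align_to_reference(ref_ts, other_ts, tolerance=FRAME_PERIOD_60FPS):
    """Match each reference timestamp to the nearest sample in `other_ts`.

    Returns one entry per reference timestamp: the matched index into
    `other_ts`, or None when no sample lies within `tolerance` seconds.
    """
    if not other_ts:
        return [None] * len(ref_ts)
    matches = []
    for t in ref_ts:
        j = nearest_index(other_ts, t)
        matches.append(j if abs(other_ts[j] - t) <= tolerance else None)
    return matches


# Synthetic example: a 60 FPS camera stream and a 240 FPS tracker stream.
camera_ts = [k / 60.0 for k in range(4)]
tracker_ts = [k / 240.0 for k in range(16)]
matches = align_to_reference(camera_ts, tracker_ts)
```

Here each camera frame is paired with every fourth tracker sample. Using the lowest-rate stream as the reference keeps one match per frame; for event data, which has no fixed frame rate, one would more likely collect all events falling inside a time window around each reference timestamp.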